Authors Célian Glénaz, Dahmun Goudarzi, Julio Loayza Meneses

Category Cryptography

Tags differential-fuzzing, cryptography, vulnerability, tool 2025

Following the introduction of crypto-condor and differential fuzzing in earlier blogposts, we showcase a use case where Quarsklab's automated test suite for cryptographic implementations allowed us to improve the reference implementation of the recently standardized HQC scheme.

Introduction

In March 2025, NIST decided to standardize HQC as the last key encapsulation mechanism (KEM) (and fifth standard) for post-quantum algorithms. This algorithm is based on error-correction codes, a mathematical problem that has served as the basis of cryptographic constructions for more than half a century. It will serve as a backup to the already standardized ML-KEM (formerly known as Kyber). In this blogpost, we give a high-level explanation on how HQC works and how you can do automatic testing of implementations of this standard with internal test suits. This allowed us to find two critical bugs in the last version of HQC's reference implementations (right before its standardization) which led to CVE-2024-54137.

Hamming Quasi-Cyclic (HQC)

HQC is a key encapsulation mechanism (KEM) based on code theory, and more particularly on the hardness of the well-known syndrome decoding problem in the specific case of structured codes. As with many KEMs, it is built as the combination of a public-key encryption scheme and the HHK transformation. If you want more details about post-quantum KEM, we invite you to read this very insightful blogpost from Cloudflare.

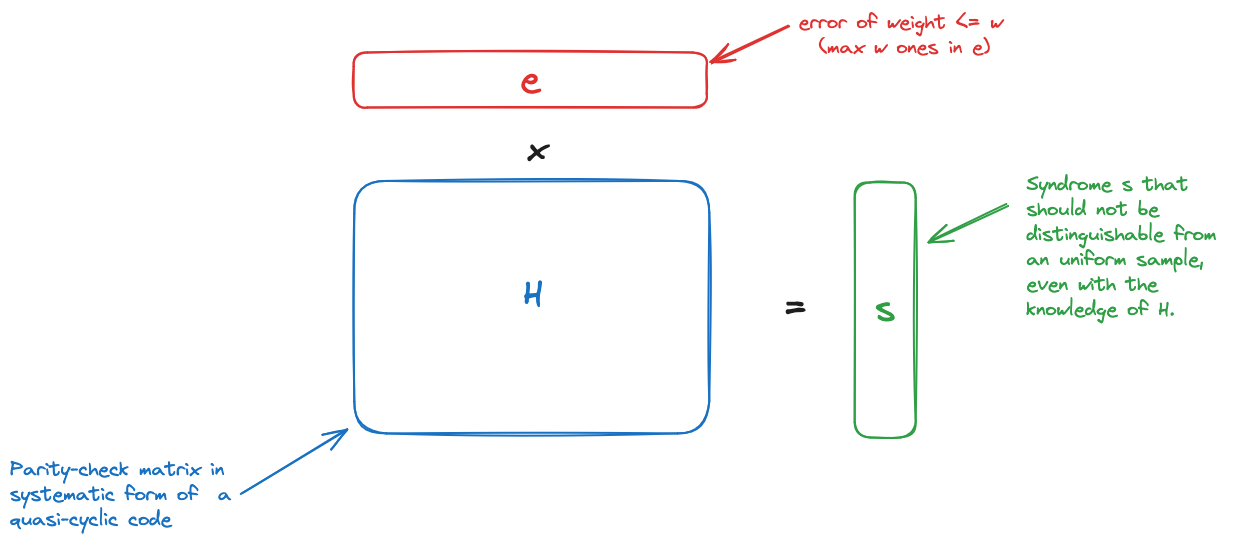

High-level vulgarisation of the Syndrome Decoding problem in HQC context.

KEMs are composed of three algorithms:

KeyGen: a key generation algorithm that computes the key pair composed of a public key and private key, from the scheme parameters and setup.Encapsulate: an encapsulation algorithm that takes a public key as input, generates a shared secret, and encrypts it into a ciphertext.Decapsulate: a decapsulation algorithm that decrypts the ciphertext with the secret key and recovers the shared secret.

According to NIST, one of the main advantages of HQC compared to other code-based schemes is the precise decryption failure analysis provided by the authors.

Automation of Conformity and Resilience Testing

At Quarkslab, to properly evaluate cryptographic implementations, we developed two tools: one for conformity checks and another for resilience testing. These tools are part of a broader toolchain that allows us to automate high-assurance cryptography implementation assessments by testing functional correctness, memory safety, and constant-time execution.

Test Vectors with crypto-condor

crypto-condor's logo

crypto-condor is an open-source tool for conformity checks. To find out more, please refer to this blogpost by Julio Loayza Meneses. Its latest release includes test vectors for the four previous PQC standards and the freshly standardized HQC.

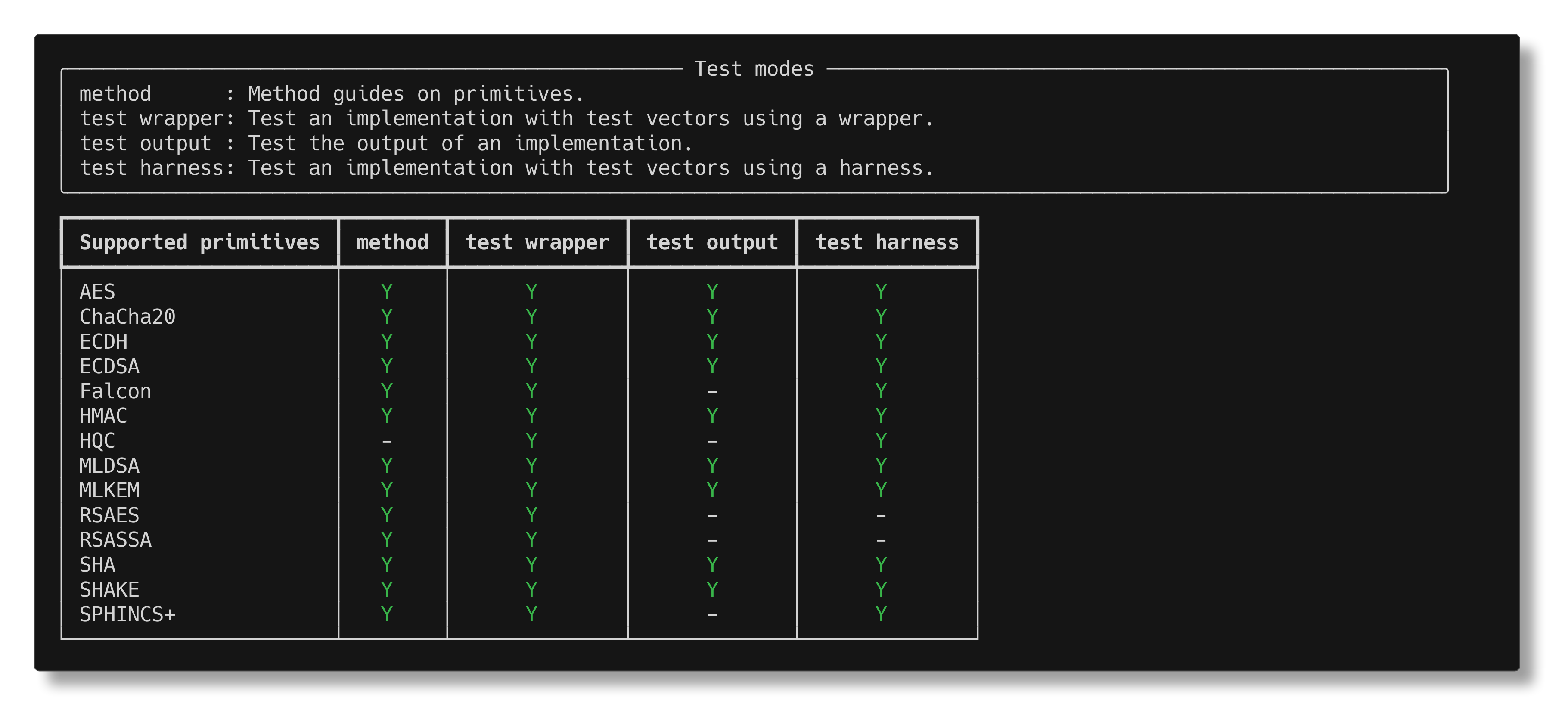

List of primitives supported by crypto-condor

Implementations of the PQC standards can be tested with I/O or through a harness, depending on whether you are in possession of the source code or a binary.

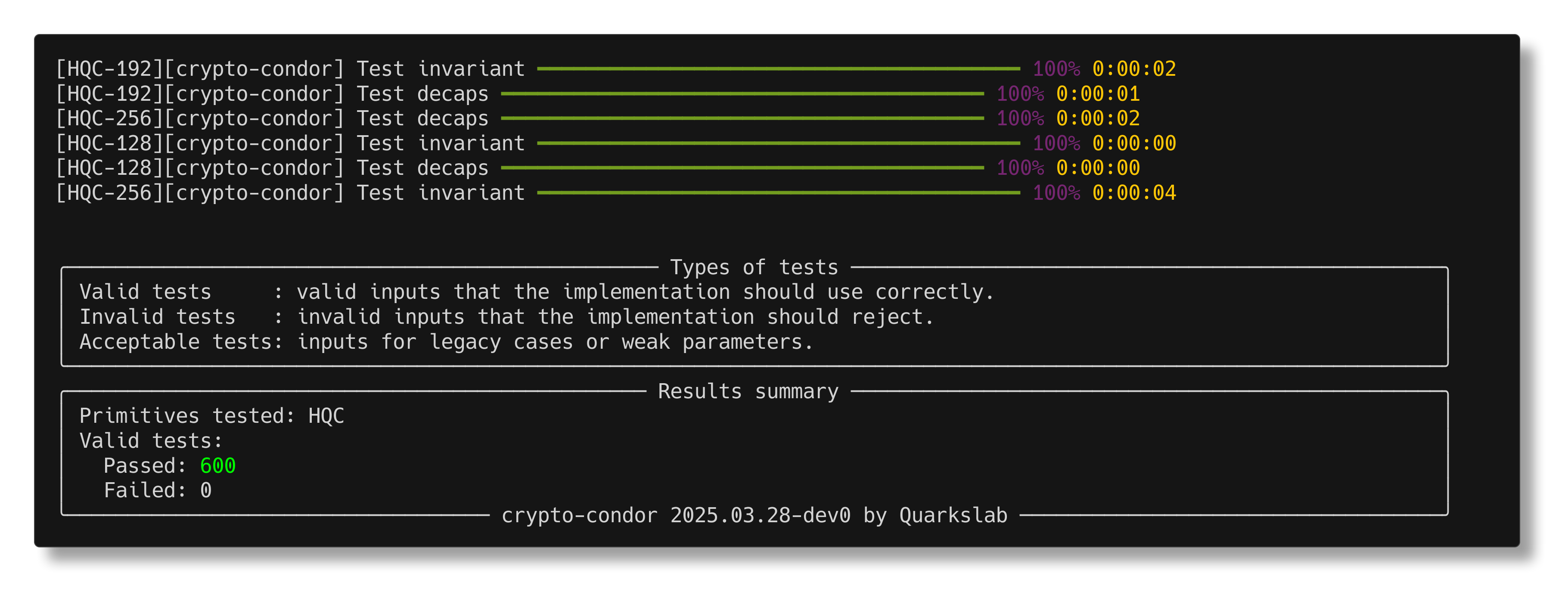

Testing HQC through a crypto-condor harness

HQC Decapsulation Non-Correctness detected with DeltAFLy

DeltAFLy's logo

DeltAFLy is a closed-source tool developed by Quarkslab to assess in-depth functional correctness (for edge cases not covered with test vectors), memory safety, and constant-time behavior of cryptographic implementations, based on differential fuzzing. To find out more, please refer to this blogpost by Célian Glénaz.

During one of our fuzzing campaigns, which targeted LibOQS, we discovered an inconsistency in the HQC decapsulation function. We detail hereafter the nature of the issue, which came from two bugs present in the reference implementation of HQC (prior to the February 19, 2025 version).

Location

The bug was situated in the decapsulation function in the file kem.c of the three projects: HQC reference implementation, PQClean, and LibOQS (see here for the reference implementation of HQC). This is because PQClean followed the implementation spec of HQC, and LibOQS core functions are built with PQClean.

Impact

The public key is wrongly extracted from the secret key in the decapsulation function, which leads to private parameters being stored inside public variables.

The bug in the implementation impacts the correctness of the system as a whole, under the definitions provided in their security analysis. In fact, the decapsulation result is returned for invalid inputs despite HQC providing implicit rejection. This is due to the presence of two successive bugs, canceling each other out in the results computation. This means that providing any ciphertext to a server will result in a successful KE protocol.

/**

* @brief Parse a secret key into a string

*

* The secret key is composed of the seed used to generate vectors <b>x</b> and <b>y</b>.

* As technicality, the public key is appended to the secret key in order to respect NIST API.

*

* @param[out] sk String containing the secret key

* @param[in] sk_seed Seed used to generate the secret key

* @param[in] sigma String used in HHK transform

* @param[in] pk String containing the public key

*/

void PQCLEAN_HQC256_CLEAN_hqc_secret_key_to_string(uint8_t *sk, const uint8_t *sk_seed, const uint8_t *sigma, const uint8_t *pk) {

memcpy(sk, sk_seed, SEED_BYTES);

memcpy(sk + SEED_BYTES, sigma, VEC_K_SIZE_BYTES);

memcpy(sk + SEED_BYTES + VEC_K_SIZE_BYTES, pk, PUBLIC_KEY_BYTES);

}

In the above code snippet, we can see that the secret key object sk is constructed as the concatenation of:

seed: a seed of sizeSEED_BYTES;sigma: a vector of sizeVEC_K_SIZE_BYTES;pk: the public key of sizePUBLIC_KEY_BYTES.

However, in the decapsulation function, the public key is incorrectly extracted from the sigma part as follows:

const uint8_t *pk = sk + SEED_BYTES;

We can easily compare the values of the two public keys and see that they differ.

pk used for kem_encaps:bdec9d8b9d3e1aef44f4933c50ad0c60b13bf36eaa7e52fcca5ef00030fb7685d90a521f43c70ac877ef1372197b0a088b66

pk used in kem_decaps:ec131533ed119f595cf6356dd6f162b4489c1093400f73682c13773825c6b298bdec9d8b9d3e1aef44f4933c50ad0c60b13b

This means that it is not possible for the decapsulation to reconstruct a sound ciphertext c' that is equal to the input one c.

However, since the boolean result, used as a mask for the return value, is wrongfully constructed, the output will always indicate success, as shown below:

// Check if c != c'

result |= PQCLEAN_HQC128_CLEAN_vect_compare((uint8_t *)u, (uint8_t *)u2, VEC_N_SIZE_BYTES);

result |= PQCLEAN_HQC128_CLEAN_vect_compare((uint8_t *)v, (uint8_t *)v2, VEC_N1N2_SIZE_BYTES);

result = (uint8_t) (-((int16_t) result) >> 15);

for (size_t i = 0; i < VEC_K_SIZE_BYTES; ++i) {

mc[i] = (m[i] & result) ^ (sigma[i] & ~result);

}

This bug was reported in December 2024 to LibOQS, and fixed by HQC authors in February 2025 (see here).

Conclusion

The introduction of new cryptographic standards due to the emergence of quantum threats brings a variety of new implementations. These source codes need to be of high assurance to ensure a smooth transition from classical cryptography to post-quantum cryptography, while maintaining the same level of security and trust.

At Quarkslab, we developed a test suite to automate the high-assurance assessment of cryptographic implementations. In this blog post, we demonstrate how our tools, such as crypto-condor and DeltAFLy, are important in detecting bugs and misbehavior in cryptographic implementations, such as the bug in the reference implementation of HQC that led to CVE-2024-54137.

Additionally, we found issues in libraries implementing post-quantum primitives (a constant-time issue and several bugs in the Java HQC implementation of Bouncy Castle), discovered through differential fuzzing between LibOQS and Bouncy Castle. Their reporting can be found here.

These bugs, and how they propagated to other implementations, show that it is extremely important to have experts audit reference implementations. This is not exclusive to the new post-quantum algorithms, that do not benefit yet from years of scrutiny like classic algorithms such as AES. For example, two years ago, we discovered vulnerabilities in the TPM 2.0 reference implementation code.