A retrospective on the past three years of development and a look at the future of Irma, our file analysis solution.

Introduction

Irma is our file security analysis software. Originally developed as an open source project with the sponsorship of 5 major European organizations, it is now also one of Quarkslab's commercial software products.

Since our last post from February 2016, Irma has evolved significantly. This post summarizes the major changes: the internal ones, such as the software stack changes and the security improvements, and the most visible ones, the new features.

Software stack

Time for a hug

While we moved fast adding user-facing features up to v1.3, we also accumulated a lot of technical debt. At some point, if the project is to keep evolving, there is no way around it: you have to deal with this debt.

While it is less sexy for an end user, it restores the developers' confidence that the code will be able to adapt to ideas we have not thought of yet.

Among those changes, let me pick a few and try to explain their motivations. From day one of Irma, all frameworks were chosen with the idea that they would only last for a time, so none of them should prevent us from easily moving to another one.

One of the first changes we made was to move from Bottle to hug, mainly for its API versioning support and parameter specifications. After some custom development, we added automatic documentation in OpenAPI format, meaning developers no longer need to update a standalone document describing the API.

At that point, hug came with a strong constraint: moving to Python 3, while all the code was in Python 2. But once more, it sounded like a natural evolution, given that Python 2 will reach end of life in January 2020.

External reusable modules

As the internal architecture was heavily modified, moving from multiple repositories to a single one, we also factored out the parts that could be shared.

We released a Python module named irma-shared that helps with using the API, as it contains constants and marshmallow serialization schemas for all API objects.

We had already published a simple Python module to interact with our API, called irmacl (for "irma command line"). It has now evolved into irmacl-async and, as you might guess, it is now based on asyncio, the Python asynchronous I/O library.
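To illustrate why an asyncio-based client is attractive here, the following is a minimal sketch of submitting several files concurrently. It does not use the irmacl-async interface: `fake_scan`, `scan_all` and `run_scans` are hypothetical names, and the network call is simulated with `asyncio.sleep`.

```python
import asyncio


async def fake_scan(filename, delay=0.01):
    """Hypothetical stand-in for an API call; NOT the irmacl-async interface."""
    await asyncio.sleep(delay)  # simulates waiting for the server to answer
    return {"file": filename, "status": "finished"}


async def scan_all(filenames):
    # gather() runs the coroutines concurrently and keeps the input order.
    return await asyncio.gather(*(fake_scan(name) for name in filenames))


def run_scans(filenames):
    """Synchronous entry point for scripts that are not already async."""
    return asyncio.run(scan_all(filenames))
```

With a real client the waiting time is dominated by the server, so awaiting many requests at once rather than one after another is where most of the gain would come from.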

Healthier releases

If you have access to our Changelog, you may have noticed that its format changed. This is directly linked to the way our new releases are produced. We now use semantic release, which basically checks the commits on a branch since the last tag and decides whether the next release will be:

a patch version (only bugfix commits)

a minor version (some new features added)

or a major version (at least one breaking change)

This implies checking that commit messages are well formatted, which is the job of commitlint. Together, these tools allow us to fully automate the creation of a new version.
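The decision rule described above can be sketched in a few lines. This is an illustration only, not the semantic release implementation. It assumes commit messages follow a Conventional Commits style (`fix:`, `feat:`, a `!` marker or a `BREAKING CHANGE:` footer), which the post does not specify, and `bump_kind` and `next_version` are hypothetical helper names.

```python
import re

# Assumed header format: type, optional (scope), optional "!" for a breaking change.
HEADER_RE = re.compile(r"^(?P<type>[a-z]+)(\([^)]*\))?(?P<breaking>!)?: .+")


def bump_kind(messages):
    """Return 'major', 'minor', 'patch' or None for a list of commit messages."""
    kind = None
    for message in messages:
        header = message.splitlines()[0] if message else ""
        match = HEADER_RE.match(header)
        if match is None:
            continue  # a commit linter would normally reject such a message earlier
        if match.group("breaking") or "BREAKING CHANGE:" in message:
            return "major"  # one breaking change is enough
        if match.group("type") == "feat":
            kind = "minor"
        elif match.group("type") == "fix" and kind is None:
            kind = "patch"
    return kind


def next_version(current, messages):
    """Compute the next 'X.Y.Z' version from the commits since the last tag."""
    major, minor, patch = (int(part) for part in current.split("."))
    kind = bump_kind(messages)
    if kind == "major":
        return f"{major + 1}.0.0"
    if kind == "minor":
        return f"{major}.{minor + 1}.0"
    if kind == "patch":
        return f"{major}.{minor}.{patch + 1}"
    return current  # nothing releasable, e.g. only documentation commits
```

The sketch also shows why linting matters: a commit whose header does not match the expected format is simply invisible to the version computation.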

Another important move was to pin the versions of our dependencies. It is already hard to test Irma in all its possible configurations, and without fixed dependencies it becomes almost impossible. As we were also working on an offline installation, knowing that the dependencies of a given version are fixed at a given time allowed us to go back in time and replay an installation.

Pinning versions does not mean using outdated versions, and the constant work of checking for newly available versions is delegated to Renovate Bot. It checks the dependencies, updates the requirements files and automatically opens Merge Requests so that the changes can be tested (we use GitLab).
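As an illustration of what "pinned" means in practice, here is a minimal sketch that lists the lines of a pip-style requirements file that are not pinned with `==`. It is not how Renovate Bot works, and `unpinned_requirements` is a hypothetical helper. The parsing is deliberately simplified (no URL or VCS requirements, for instance).

```python
import re

# A requirement counts as pinned only when it uses "==" with a full version
# (no wildcard). Extras such as "celery[redis]" and environment markers after
# ";" are tolerated.
PINNED_RE = re.compile(
    r"^[A-Za-z0-9][A-Za-z0-9._-]*(\[[^\]]*\])?\s*==\s*[^\s;,*]+(\s*;.*)?$"
)


def unpinned_requirements(text):
    """Return the requirement lines that are not pinned to an exact version."""
    loose = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line or line.startswith("-"):
            continue  # skip blank lines and pip options such as "-r other.txt"
        if not PINNED_RE.match(line):
            loose.append(line)
    return loose
```

A check like this could run in continuous integration so that an unpinned dependency is caught before it makes an installation impossible to replay.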

Security

Even if Irma is meant to run on a private network, its goal is to provide companies with an internal solution for file analysis. As such, files should not be accessed without proper permissions, and this motivated all the following work on improving Irma security.

Hardening roles

We recently worked on applying the security guidelines published by ANSSI, the National Cybersecurity Agency of France (guidelines in French): firewalling all network accesses, disabling unused accounts, removing unused applications, setting passwords wherever they could be set…

The interesting part of doing this through Ansible roles is that the hardening can be easily audited, and that it can be applied in the same way to the running virtual machines and to the host.

Public Key Infrastructure

Since v2.1.0, Irma handles the Public Key Infrastructure (or PKI) required for Nginx, RabbitMQ and PostgreSQL TLS support. It allows a user to generate self-signed certificates, generate certificates from a company certificate authority, or use external certificates. For Nginx it also handles the generation and revocation of client certificates (something we mainly used to identify clients before supporting user authentication in v3.0).

Ciphering of samples

Samples are now stored encrypted with AES-CTR. The goal is not confidentiality but safety: samples are potentially harmful files, and encrypting them ensures that stored copies (e.g. backups) are not accidentally opened or executed.

Features

Enterprise vs Opensource

If the GitHub repository does not seem very active (let's face it), it is mainly for two reasons. The first is that we are trying a strategy in which the Core project is complemented by additional features in the Enterprise version. After 2 years with this approach we may (it is still just an option) go back to synchronizing the two product versions.

The second reason is that we were busy developing enterprise sub-projects, mainly Irma Kiosk, a complete USB kiosk client for Irma Core, and Irma Factory, the component in charge of handling offline updates.

Core new features

Since v1.3, a lot of new features have appeared:

new probes, mainly new antivirus support: Kaspersky Endpoint Security Linux, ESET file server Linux, DrWeb file server Linux, Trend Micro Server Protect. Antivirus probes now output their virus database version along with their program version, which makes it easy to check whether files should be rescanned for up-to-date results. We also developed a LIEF probe to parse ELF, PE and MachO binaries.

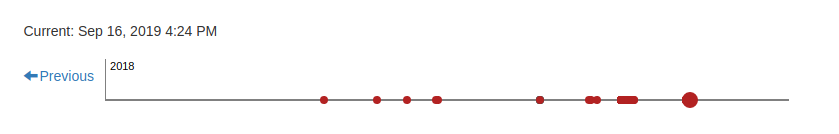

scan timelines that let a user see and browse all prior analyses of a given file. Each dot on the timeline represents an analysis and is colored red or green according to the analysis result. See an example below:

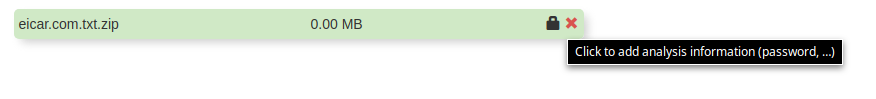

support for password-protected archives. The password is requested interactively, and the administrator can also enter a list of passwords that will be tried automatically (the industry standard "infected", for example). The user sends an archive password through the "add analysis information" option.

file export to a password-protected zip file instead of downloading the raw samples, to prevent accidental contamination.

search was also improved and now allows a user to search files by analysis status or by virus name.

user authentication, added in v3.0. Users are given a list of permissions that grant access to a given set of API endpoints, and they can only access their own files.

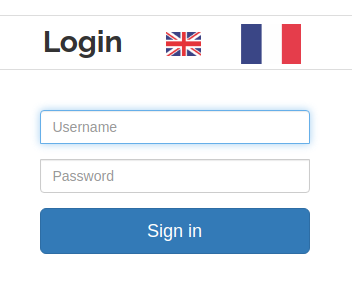

(As you may have guessed from the flags, we also worked on internationalisation aka i18n.)
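The permission model introduced with user authentication in v3.0 (permissions granting sets of API endpoints, plus file ownership) can be sketched as follows. This is a minimal illustration, not Irma's implementation: the permission names, endpoint strings and helper names are all hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical mapping from a permission name to the API endpoints it grants.
PERMISSION_ENDPOINTS = {
    "scan": {"POST /scans", "GET /scans"},
    "files": {"GET /files", "GET /files/download"},
}


@dataclass
class User:
    name: str
    permissions: set = field(default_factory=set)


@dataclass
class StoredFile:
    sha256: str
    owner: str


def can_call(user, endpoint):
    """True when at least one of the user's permissions grants the endpoint."""
    return any(
        endpoint in PERMISSION_ENDPOINTS.get(permission, set())
        for permission in user.permissions
    )


def can_access_file(user, stored_file, endpoint="GET /files"):
    """Both checks must pass: endpoint permission and file ownership."""
    return can_call(user, endpoint) and stored_file.owner == user.name
```

Keeping the two checks separate mirrors the description above: permissions decide which endpoints a user may call, while ownership decides which files those calls may touch.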

What's next

Enterprise features

The natural follow-up to our user authentication system is a real Role Based Access Control (RBAC) with support for enterprise authentication systems. Another ambitious goal for us will be to create a brand new User Interface from scratch, as the current one has not changed since version 1 and is showing its age.

Analysis capabilities

Our goal is to push Irma towards a generic file analysis orchestrator. To achieve that, we plan to first add new kinds of analyses: sanitizing probes, which will let Irma display previews of documents and could completely change our use cases, and dynamic analysis and binary similarity detection probes, which will provide more advanced results.

Afterwards, we will offer users a way to customize the analysis workflow according to the use case, the type of file analysed, or the list of probes available.

Conclusion

A lot has happened in Irma since my last blog post in 2016. Some core components replaced others. We streamlined dependency maintenance, testing and the release process, improved the documentation, extended support for more probes and added many new features. We also have many plans and expectations for the future.

If you are interested in practical use cases or just want to know more about Irma, we will hold a Webinar in French on the 26th of November on how to automate file analysis. You can register here.