Authors Dahmun Goudarzi, Damien Aumaitre, Ramtine Tofighi Shirazi

Category Software

Tags audit, confidential computing, software, sgx, 2023

Mithril Security engaged Quarkslab to audit BlindAI-preview, now known as BlindAI Core, an open-source confidential computing solution for deploying and querying AI models while guaranteeing data privacy. The goal of the audit was to evaluate the resilience of BlindAI against a threat model defined after a review of the latest state-of-the-art attacks.

Introduction

BlindAI is an AI inference server with an added privacy layer that aims to protect the data sent to models. The main objective is to leverage state-of-the-art security to benefit from AI predictions, without putting users' data at risk. For that purpose, BlindAI is built on top of the hardware protection provided by Intel SGX, to provide end-to-end data protection.

BlindAI overview

BlindAI is based on a Rust server that runs on hardware supporting Intel SGX. Thanks to this specific set of instructions provided by Intel, BlindAI can provide an isolated space, called an enclave, where AI inference can be performed securely and privately.

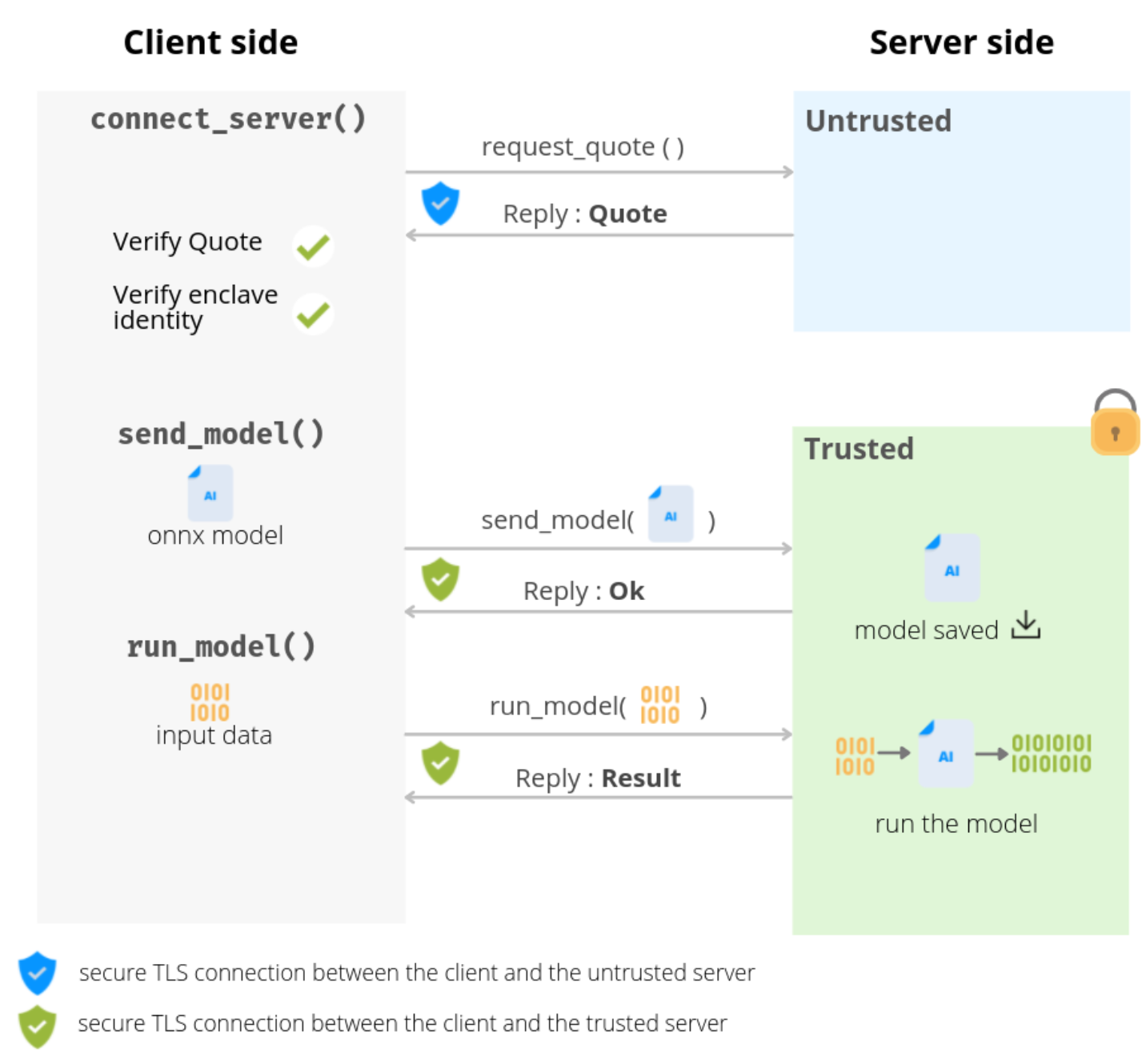

To interact with the enclave, BlindAI also provides a Python-based client, which allows a user to upload, run, and delete models on the server after establishing a secure communication channel to the server via TLS.

The entire client-server protocol of BlindAI can be seen as follows:

Please note that this overview is taken from a previous version of BlindAI-preview documentation, but the overall design rationale is still relevant.

Scope

The goal of the audit was to define the threat models relevant to BlindAI and to perform security testing of the server-side components based on them, within an allocated time frame. To that end, the following scope of work was defined and agreed upon by Mithril Security and Quarkslab:

- Step 1: study of state-of-the-art attacks on Intel SGX, and more broadly in the confidential computing area;

- Step 2: define a threat model and refine a test plan relevant to BlindAI;

- Step 3: security assessment and testing of BlindAI based on the previous steps.

Quarkslab's auditors reviewed the state of the art on recent Intel SGX attacks and related tools. A threat model, covering man-in-the-middle and man-at-the-end attacks among others, was then defined to give Mithril Security a prioritized list of threats to address as the BlindAI project evolves.

The full report of the assessment can be found in the BlindAI GitHub repository. A blogpost by Mithril Security related to the audit can also be found here.

Findings

The table below summarizes the findings of the audit. A total of 8 security findings were identified, with 1 vulnerability rated as low and 7 security weaknesses considered as informative only.

| ID | Description | Category (CWE) | Severity |
|---|---|---|---|
| LOW 1 | BlindAI does not have a mechanism to offload to the disk when memory consumption is high, which makes a denial-of-service attack possible when multiple models are sent. | CWE-770: Allocation of Resources Without Limits or Throttling | Low |
| INFO 1 | Enclaves are unsigned prior to running, which is not compliant with the Fortanix EDP documentation. | CWE-345: Insufficient Verification of Data Authenticity | Info |
| INFO 2 | One of the dependencies used by the enclave, `rouille`, seems to have some issues. | CWE-1104: Use of Unmaintained Third Party Components | Info |
| INFO 3 | The code used in `BlindAI` does not properly handle errors or exceptional conditions. | CWE-703: Improper Check or Handling of Exceptional Conditions | Info |
| INFO 4 | Some functions use `unwrap` (which can cause a panic) when they are supposed to handle errors. | CWE-676: Use of Potentially Dangerous Function | Info |
| INFO 5 | When the runner requests a quote from the quoting enclave, it uses a nonce value set to 0, which is not good practice. | CWE-323: Reusing a Nonce, Key Pair in Encryption | Info |
| INFO 6 | The client uses `rapidjson` from Tencent, which is known for not addressing security issues. | CWE-1357: Reliance on Insufficiently Trustworthy Component | Info |
| INFO 7 | Usage of `CBOR` to encode data sent and received by the enclave, which is not maintained anymore. | CWE-1104: Use of Unmaintained Third Party Components | Info |
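To make finding INFO 4 concrete, the sketch below contrasts a parsing helper that calls `unwrap` with one that returns a `Result`. It is only an illustration under stated assumptions: the length-prefixed payload format, the `RequestError` type, and the function names `declared_len_unwrap` and `parse_body` are all hypothetical and are not taken from the BlindAI codebase.

```rust
use std::fmt;

/// Hypothetical error type for this sketch (not from the BlindAI codebase).
#[derive(Debug, PartialEq)]
pub enum RequestError {
    /// The payload is too short to hold the 4-byte length prefix.
    Truncated,
    /// The declared body length differs from the number of bytes received.
    LengthMismatch { declared: usize, actual: usize },
}

impl fmt::Display for RequestError {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        match self {
            RequestError::Truncated => write!(f, "payload is truncated"),
            RequestError::LengthMismatch { declared, actual } => {
                write!(f, "declared {} bytes but received {}", declared, actual)
            }
        }
    }
}

impl std::error::Error for RequestError {}

/// Panicking variant: `unwrap` turns a short payload into a panic,
/// so a malformed request can take down the thread that handles it.
pub fn declared_len_unwrap(payload: &[u8]) -> usize {
    let prefix = payload.get(..4).unwrap();
    u32::from_be_bytes([prefix[0], prefix[1], prefix[2], prefix[3]]) as usize
}

/// Fallible variant: malformed input is reported to the caller as an error.
pub fn parse_body(payload: &[u8]) -> Result<&[u8], RequestError> {
    // `get` returns `None` for a short payload; `ok_or` maps that to an error.
    let prefix = payload.get(..4).ok_or(RequestError::Truncated)?;
    let declared = u32::from_be_bytes([prefix[0], prefix[1], prefix[2], prefix[3]]) as usize;
    let body = &payload[4..];
    if body.len() != declared {
        return Err(RequestError::LengthMismatch {
            declared,
            actual: body.len(),
        });
    }
    Ok(body)
}
```

With the fallible variant, a request handler can map `RequestError` to an error response and keep serving other clients, whereas the panicking variant leaves the caller no chance to recover.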

Conclusion

We reviewed the state of the art of Intel SGX technology, the attacks against it, and the modules related to BlindAI. Based on this review, a mapping of the components and a threat model were established in order to focus the security audit on relevant items within the allocated time frame.

Using the defined security perimeter and tests, the audit uncovered one low-severity issue and several informative ones in the codebase, but nothing critical or exploitable.

However, the BlindAI-preview client should also be hardened: although it was considered out of scope for this audit, it can easily become an entry point for malicious actors.

Overall, Quarkslab would like to thank Mithril Security's team for this interesting and fruitful collaboration.